Facebook rushed to apologize on Friday after it labeled black men as “primates”. A Facebook spokesperson told The New York Times, which first reported the story, that it was a “clearly unacceptable error” of its auto-generated recommendation system and said the software involved was disabled.

“We apologize to anyone who may have seen these offensive recommendations,” Facebook – whose senior management team is completely white – said in response to an AFP inquiry. “We disabled the entire topic recommendation feature as soon as we realized this was happening so we could investigate the cause and prevent this from happening again.”

Facebook users who in recent days watched a British tabloid video featuring Black men were shown an auto-generated prompt asking if they would like to “keep seeing videos about Primates?”

A screen capture of the recommendation was shared on Twitter by former Facebook content design manager Darci Groves, who said a friend sent her a screenshot of the video in question with the company’s auto-generated prompt. The video, dated 27 June 2020, was posted by the UK’s Daily Mail.

It contained clips of two separate incidents, which appear to take place in the US. One shows a group of black men arguing with a white individual on a road in Connecticut, while the other shows several black men arguing with white police officers in Indiana before being detained. “This ‘keep seeing’ prompt is unacceptable,” Groves tweeted, aiming the message at former colleagues at Facebook. “This is egregious.”

Um. This “keep seeing” prompt is unacceptable, @Facebook. And despite the video being more than a year old, a friend got this prompt yesterday. Friends at FB, please escalate. This is egregious. pic.twitter.com/vEHdnvF8ui

— Darci Groves (@tweetsbydarci) September 2, 2021

In response, a Facebook product manager said the company was “looking into the root cause”. The company later said the recommendation software involved had been disabled.

“We disabled the entire topic recommendation feature as soon as we realised this was happening so we could investigate the cause and prevent this from happening again,” a spokesperson was cited by The New York Times as saying.

Twitter users were split in their response to the AI-generated Facebook prompts. Some were shocked that the platforms continued to fail to address the issue.

Again?! How did we not learn this after Google?! They have a much larger data set, are they not training it any better?! Argh

— Bulbul Gupta (@bulbulnyc) September 4, 2021

And to think, this is the same AI that Zuck says will replace human content moderators…

— Chelsea 🙌🏻 (@thatchelseagirl) September 3, 2021

Others proceeded to directly accuse Facebook of being racist, incompetent and evil.

They’re apologizing because their AI tagged Black men as primates,

even though they won’t rewrite the system? They keep making it obvious that the whites are coding and training the AI to be as racist as they are.

— TaryntheRealtor (@TaryntheR) September 4, 2021

Facebook is equal parts incompetent and evil.

— Chilly Freezesteak (@freezesteak) September 4, 2021

A third group saw nothing wrong with the AI algorithm that triggered the outrage.

This doesn’t happen because Facebook or any other tech company is racist. It happens because AI still isn’t very “smart”. I do this for a living and the tech is getting better, but it’s not there yet, which is why I still don’t own an auto driving vehicle.

— anton (@perchedfalcon) September 4, 2021

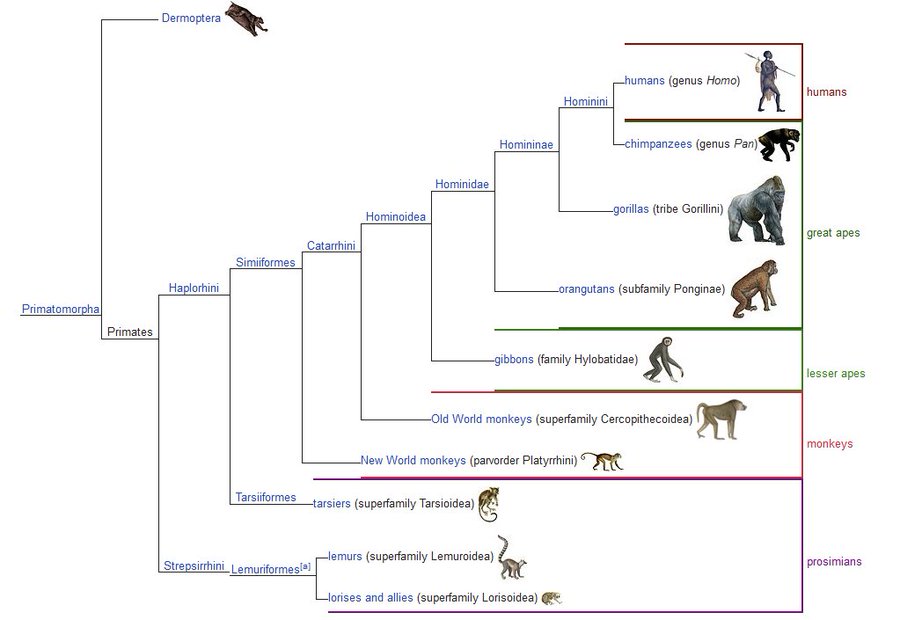

Humans are primates biologicaly. So what’s wrong on it? pic.twitter.com/NWPwYjPa9b

— David Michal (@DavidMi52138016) September 4, 2021

This is not the first time facial recognition software and “racist” AI have gotten into trouble. The latest AI fiasco comes as tech companies have come under fire for perceived biases displayed by their artificial intelligence software, despite the companies’ solemn pledges to the woke cause. Last year, Twitter investigated whether its automatic image cropper might be racially biased against black people when it selected which part of a picture to preview in tweets.

A faculty member has been asking how to stop Zoom from removing his head when he uses a virtual background. We suggested the usual plain background, good lighting etc, but it didn’t work. I was in a meeting with him today when I realized why it was happening.

— Colin Madland, PhD(c) (@colinmadland) September 19, 2020

In 2015, Google’s algorithm reportedly tagged two Black people’s faces with the word “gorilla”, prompting the company to say it was “genuinely sorry that this happened,” in a statement to The Wall Street Journal.
